New Mexicans for Science and Reason

Speakers and Fun Science!

Updated 6 March 2009

While many of the NMSR web pages are devoted to "debunking" various fringe or pseudoscientific beliefs, NMSR also promotes fun and fascinating science for its own sake. Many of our speakers describe their work at NMSR meetings, and this page is devoted to descriptions of interesting research presented to NMSR.

February 2009 Meeting: Dr. Anne H. Weaver on "The E-word: Teaching Evolution in the Land of Enchantment"

Anne H. Weaver, Ph.D. (author of The Voyage of the Beetle) spoke on "The E-word: Teaching Evolution in the Land of Enchantment" at our February 11th meeting, a celebration of Charles Darwin's 200th birthday (Feb. 12th). Starting with some recent education statistics, Anne showed that public acceptance of evolution is lower in the USA than in every other developed country surveyed except Turkey. She reported that 18% of New Mexico 4th graders achieve proficiency in science, compared to the national average of 27%, and that 18-19% of New Mexico 8th graders achieve proficiency in science, compared to a national average of 27%. Dr. Weaver also showed that science comprehension falls markedly between 3rd and 8th grades.

When the question "What is the Scientific Method?" was asked of Santa Fe's prospective school board members, the answers revealed a striking lack of science literacy:

"Ha. That's a good one. (Long pause.) The scientific method. I would guess that it would be deductive learning: being able to break it down, follow a formula." (Martin Lujan, Incumbent Board President, 8 years on School Board);

"Ok, well, the scientific method would be a method where you look for the facts, if I remember from elementary school. That's about as close as I can get to that. I haven't been to school since 1976." (Frank Montano, Incumbent)

"Any answer would be too much of a guess. I'm running for oversight and management and leadership, not professional education. That's not our mandate, as far as board members go. So you can say my answer is 'N/A.'…" (Peter Brill, running in District 5).

Anne described what factors correlate with student proficiency: monolingual cultures, societal attitudes and expectations, student self-confidence, availability of adequate resources, teacher preparation, unified curriculum aligned to clear standards, and an emphasis on principles and core ideas. As to the importance of evolution in science, Dr. Weaver cited Scott & Branch, 2008: "A teacher who tries to present biology without mentioning evolution is like a director trying to produce Hamlet without casting the prince."

Anne talked about how religious beliefs figure into the "debate," and discussed the Discovery Institute's "Wedge Strategy." The new terms being used by "Intelligent Design" advocates now include "both sides," "critical analysis," "strengths and weaknesses," "fairness," "academic theory," and Intelligent Design "theory." Anne showed that New Mexico's state policy is strongly worded against allowing Intelligent Design.

Anne then moved beyond the question of religion to a broader one: why is creationism popular with children, even those from free-thinking or atheist families? She identified several conceptual "Sticking Points": naïve creationism, naïve essentialism, and naïve attribution of intentionality. Speaking of how children learn, she said that children's explanations for life's origins usually fall into one of three categories: creationist, spontaneous generation, or evolution. In fundamentalist communities, creationist responses predominate in every age group; but even in nonfundamentalist communities, the youngest children (Grades K-2) favored creation and spontaneous generation in equal numbers, the middle group (Grades 3-4) favored a creationist explanation, and the older group (Grades 5-7) and the adult group favored creation and evolution equally. Anne said the root cause was the notion of "Essentialism": "…children …[treat] members of a category as if they have an underlying 'essence' that can never be altered or removed. Essentialism … may even discourage children's learning of evolutionary theory…" (Gelman, 1999).

How can we reach these children? We need to find innovative and interesting ways to help kids grasp the principle "Individuals Vary; Populations Evolve." Anne described some engaging acting-out methods for teaching about variation. An audience member described a lesson in which a big bowl of candy corn mixed with M&Ms is passed around, with instructions not to eat the candy corn. After many rounds, some M&Ms will still be left: the yellow and red ones, which blend in well with the candy corn.
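The candy-corn demonstration can be mimicked with a tiny deterministic model. This is only a sketch under stated assumptions: the per-round "spotted and eaten" probabilities below are made-up illustrative numbers (nobody measures them in the classroom), the model tracks expected counts rather than random draws, and, like the demo itself, it shows selective survival only, with no reproduction or inheritance. `pass_bowl` and `camouflaged_share` are hypothetical helper names.

```python
# HYPOTHETICAL detection probabilities per pass of the bowl; illustrative only.
P_SPOTTED = {
    "red": 0.05,     # blends in with candy corn (per the demo description)
    "yellow": 0.05,  # blends in with candy corn
    "blue": 0.50,    # conspicuous
    "green": 0.50,   # conspicuous
    "brown": 0.50,   # conspicuous
}
CAMOUFLAGED = {"red", "yellow"}


def pass_bowl(counts, p_spotted, rounds):
    """Expected M&M counts after each pass of the bowl (no randomness, no reproduction)."""
    history = [dict(counts)]
    for _ in range(rounds):
        # Each color loses the expected fraction of candies that get spotted and eaten.
        counts = {color: n * (1 - p_spotted[color]) for color, n in counts.items()}
        history.append(counts)
    return history


def camouflaged_share(counts):
    """Fraction of the remaining M&Ms that are camouflaged colors."""
    total = sum(counts.values())
    return sum(n for color, n in counts.items() if color in CAMOUFLAGED) / total


start = {color: 20 for color in P_SPOTTED}  # equal numbers of each color to begin with
history = pass_bowl(start, P_SPOTTED, rounds=5)
for i, counts in enumerate(history):
    print(f"round {i}: camouflaged share = {camouflaged_share(counts):.2f}")
```

The share of camouflaged colors starts at 40% and climbs every round, because the conspicuous colors are removed faster: the population's makeup shifts even though no individual candy changes.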

NMSR thanks Anne Weaver for a splendid Darwin Day talk. The annotated bibliography of her sources is posted on the NMSR web site. Check out her book, "The Voyage of the Beetle" (UNM Press).

Selected Bibliography (Annotated)

- Graph showing Acceptance of Evolution by Country: Miller, J.D., E.C. Scott, and S. Okamoto, Public Acceptance of Evolution. Science, 2006. 313 (5788): p. 765 - 766.

- Early acceptance of natural selection theory in America: Humes, E., Monkey Girl: Evolution, Education, Religion, and the Battle for America's Soul. 2007: Ecco. (p. 47)

- TIMSS scores for 4th and 8th graders: Average Science Scores of Fourth- and Eighth-grade Students, by Country: 2007, U.S. Department of Education, National Center for Education Statistics, Editor. 2007.

http://nces.ed.gov/timss/table07_3.asp

- NAEP (Nation's Report Card) scores for 4th and 8th graders: Student Percentages at NAEP Achievement Levels: Performance of NAEP Reporting Groups in New Mexico: 2005, U.S. Department of Education, National Center for Education Statistics, Editor. 2005.

http://nces.ed.gov/nationsreportcard/pdf/stt2005/2006467NM8.pdf

- New Mexico Standards Based Assessment Results: Change in Student Proficiency, School Years 2004-2005 to 2006-2007, Assessment and Accountability Division/Information Technology Division, NM Public Education Department. 2007.

- Religious affiliation in New Mexico: U.S. Religious Landscape Survey: Religious Affiliation: Diverse and Dynamic (PDF), Pew Forum on Religion and Public Life. 2008, Pew Research Center.

- Creation-Evolution Continuum: Scott, E.C., The Creation-Evolution Continuum. National Center for Science Education, 2008.

http://ncseweb.org/creationism/general/creationevolutioncontinuum/

- How Students Learn Science in the Classroom: Donovan, S.M. and J.D. Bransford, Eds., How Students Learn: Science in the Classroom, Board on Behavioral, Cognitive, and Sensory Sciences and Education, Division of Behavioral and Social Sciences and Education, National Research Council.

- Cognitive factors in Creation & Evolution beliefs: Evans, E.M., Cognitive and Contextual Factors in the Emergence of Diverse Belief Systems: Creation versus Evolution. Cognitive Psychology, 2001. 42: p. 217–266.

- Concept development in preschool children: Gelman, S.A., Dialogue on Early Childhood Science, Mathematics, and Technology Education: A Context for Learning: Concept Development in Preschool Children, AAAS. 1999.

- Recommended Reading: Weaver, A., George Lawrence, Illustrator. The Voyage of the Beetle. 2007, Albuquerque: UNM Press.

- Further Reading: Weiner, J., The Beak of the Finch. 1995, New York: Vintage Books.

November 2007 Meeting: Dr. Gary Overturf on "The Issue of Thimerosal in Vaccine: The Science and the Hysteria"

Dr. Gary Overturf is a professor of Pediatrics and Pathology at the University of New Mexico School of Medicine. At NMSR's November 14th meeting, he spoke about the hysteria that has developed in some circles over the alleged causation of autism by mercury-based preservatives in vaccines.

Gary recalled an argument he had with a creationist more than 50 years ago in Deming, NM. "People who want to believe, will," he said. He added that the same thing is happening with today's autism/vaccine scares, and warned that some 50,000 potential lawsuits may end up destroying many vital health programs. Vaccinations are the number one medical success story of the 20th century, he said. In the early 20th century, the United States had almost 200,000 cases of diphtheria annually; in 2001, there were just two. Similar successes have been achieved for smallpox, pertussis, tetanus, measles, mumps, and many other diseases.

Dr. Overturf then turned to toxins in the environment. "Everything, organic and non-organic, is potentially toxic! Even water or bovine milk are toxic enough and lethal if given in high enough dose," he said. Besides dose of toxins, other key factors include variations in animal or plant species, available metabolic pathways, and the duration and routes of exposure. Mercury, for example, can be absorbed into the body by inhalation, ingestion, skin contact, via intravenous treatments, or by injections. By far the most important consideration, he said, was "Dose, Dose, Dose!!!" Toxicity is most directly related to the dose of the toxin.

Mercury can be found in both inorganic and organic forms, he said. Inorganic mercury occurs in elemental or metallic forms, or as mercurous or mercuric salts, whereas organic mercury occurs in carbon-bonded compounds such as ethyl and methyl mercury. At high doses, mercury and mercuric compounds are well-established nephrotoxins (affecting the kidneys) and neurotoxins (affecting the nervous system). He said that neurodevelopmental effects have been demonstrated at low doses, but only for prenatal exposures, not for postnatal exposures. It's difficult to perform controlled mercury-exposure experiments on humans, because we know mercury is toxic at high doses. If metabolized, both forms of organic mercury (ethyl and methyl) affect the kidneys, whereas direct exposure to organic mercury has the most effect on the central nervous system. Mercury can inhibit protein synthesis, which is why it's a problem in the kidneys.

Thimerosal, a thiosalicylate salt of ethyl mercury, is used as a preservative in some vaccines. It is about 50% mercury by weight, but is added to vaccines in concentrations of just 0.003% to 0.01%. An average dose of mercury from the preservative in vaccines is around 17 µg (micrograms, millionths of a gram), about as much mercury as is found in a 5- to 6-ounce can of tuna. In cases where mercury treatments on bread and rice did cause severe health problems, the exposures were on the order of 2 to 3 milligrams (thousandths of a gram) per kilogram of body weight, or roughly 140 to 210 milligrams (up to about a fifth of a gram) for a 70-kilogram adult.
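The dose comparison above is simple arithmetic, sketched below using the figures quoted in the talk. One assumption is added and labeled: an infant body mass of 5 kg, chosen purely for illustration, so the comparison can be made per kilogram (comparing an infant's dose with an adult's total would flatter the margin). The sketch ignores differences between ethyl and methyl mercury, the route of exposure, and the cumulative vaccine series, so it only shows orders of magnitude.

```python
UG_PER_MG = 1_000  # micrograms per milligram

VACCINE_HG_UG = 17             # average mercury per vaccine dose, as quoted in the talk
TOXIC_MG_PER_KG = (2.0, 3.0)   # exposure range quoted for the treated-grain poisonings
ADULT_KG = 70                  # reference adult used in the talk
INFANT_KG = 5                  # HYPOTHETICAL infant body mass, for illustration only

# Total poisoning-level exposure for the 70 kg adult, in milligrams.
adult_low_mg, adult_high_mg = (d * ADULT_KG for d in TOXIC_MG_PER_KG)

# One vaccine dose expressed per kilogram of (hypothetical) infant body mass, in mg/kg.
infant_dose_mg_per_kg = VACCINE_HG_UG / UG_PER_MG / INFANT_KG

# How many times larger the poisoning range is than a single dose, per kilogram.
margin_low = TOXIC_MG_PER_KG[0] / infant_dose_mg_per_kg
margin_high = TOXIC_MG_PER_KG[1] / infant_dose_mg_per_kg

print(f"adult poisoning-level exposure: {adult_low_mg:.0f}-{adult_high_mg:.0f} mg")
print(f"one dose for a {INFANT_KG} kg infant: {infant_dose_mg_per_kg * UG_PER_MG:.1f} µg/kg")
print(f"poisoning range is ~{margin_low:.0f}x to ~{margin_high:.0f}x higher per kg")
```

Even on a per-kilogram basis and with a small assumed infant mass, a single dose sits several hundred times below the exposures seen in the poisoning cases.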

Before thimerosal was removed from infant vaccines because of concerns about its possible effects on infants, the typical series of vaccinations given in the first six months of an infant's life resulted in a total exposure of 100 to 200 µg of mercury. Of the four agencies with exposure-limit guidelines, three allowed this much with room to spare: the Agency for Toxic Substances and Disease Registry (ATSDR), under Health and Human Services (HHS) and the Centers for Disease Control and Prevention (CDC); the Food and Drug Administration (FDA); and the World Health Organization (WHO). Their guidelines would have become a concern only for six-month infant exposures above 300 or 400 µg of mercury; only the Environmental Protection Agency (EPA) had a guideline under 200 µg, specifically 106 µg. Because of the EPA's concerns, the removal of thimerosal from infant vaccines began in 1999.

Something else has changed in the last few decades: the very definition of autism itself. The old diagnosis of autism was expanded to include several disorders, now collectively called Autism Spectrum Disorders (ASD). There is considerable overlap between ASD and mental retardation; for example, Fragile X syndrome, the most common inherited cause of mental retardation, is now known to also be associated with ASD. Because the definition of "autism" was expanded, the reported incidence of autism increased, and ASD is now found in about 6 individuals per thousand (or 1 in 166). Other conditions now associated with ASD include tuberous sclerosis, PKU, Rett syndrome, and Smith-Lemli-Opitz syndrome.
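The two ways of stating ASD prevalence in the paragraph above ("per thousand" and "1 in N") are reciprocal conversions. A minimal sketch, with hypothetical helper names:

```python
def per_thousand(one_in_n: float) -> float:
    """Convert a '1 in N' prevalence into cases per 1,000 people."""
    return 1000 / one_in_n


def one_in(per_1000: float) -> float:
    """Convert cases per 1,000 people back into '1 in N' form."""
    return 1000 / per_1000


print(f"1 in 166    -> {per_thousand(166):.2f} per 1,000")
print(f"6 per 1,000 -> 1 in {one_in(6):.0f}")
```

So "1 in 166" is about 6.02 per thousand, and "6 per thousand" rounds to 1 in 167; the two figures quoted are consistent.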

While many of the conditions related to the onset of ASD have been linked to environmental exposures of the fetus in the womb, especially in the 2nd trimester of pregnancy, no data have shown that children with ASD have increased environmental exposure to mercury. In fact, Dr. Overturf said that the incidence of autism has continued to "increase" over the last seven years, despite the removal of thimerosal from vaccines during this time interval. Only one paper, published in The Lancet in 1998 by A. Wakefield et al., has been widely cited as supporting a vaccine/autism link, and most of its co-authors have since retracted its interpretation. Because the Wakefield study has been vigorously championed by several well-intentioned (but misguided) special interest groups, a large segment of the population (over 50% of parents surveyed) believes that thimerosal preservatives do produce autism in infants.

Several other studies have provided evidence against a relationship between thimerosal and ASD, including large studies in the United Kingdom (Andrews, N. et al. & Heron, J. et al., Pediatrics 114, 2004, with 109,863 children studied over 1988 to 1997) and California (JAMA 2001; 285:1183-1185), and a CDC study of 124,720 infants (phase 1) and 16,717 children (phase 2 re-evaluation) (Verstraeten, T. et al., Pediatrics 112:1039, 2003). The latter study reported that "… no consistent significant associations were found between thimerosal-containing vaccines and neurodevelopmental delay." The National Academy of Sciences has done three detailed studies (two in 2001, and another in 2004), again showing no link between thimerosal and ASD.

Professor Overturf concluded by stating that in the future, some other toxin, perhaps even one with some form of mercury, may be found to be associated with higher levels of ASD; however, many thorough studies have firmly shown that thimerosal-containing vaccines are not responsible. The "alarming" increase in autism is not due to increased exposure to toxins in the environment, but rather to the redefinition of ASD itself.

A lively Q&A session followed. Asked about radio commercials for a Santa Fe doctor claiming to have cured autism with hyperbaric oxygen therapy, Gary said the only medical application for such therapy is for scuba-diving accidents, and that there is no "magic cure" for autism, which often is associated with genetic factors.

NMSR thanks Dr. Overturf for a splendid presentation.

July 2006 Meeting: Dr. Al Zelicoff on "One Flu out of some Cuckoo's Head: Why Pandemic Influenza isn't a problem (unless you are a chicken)."

Al Zelicoff, a physicist/physician formerly at Sandia, spoke on "One Flu out of some Cuckoo's Head: Why Pandemic Influenza isn't a problem (unless you are a chicken)."

Al began by asking, "What are the major international disease problems?" He identified biological weapons (a special problem), pandemic influenza (not this time), and novel diseases (Hantavirus, Pertussis, Anthrax, etc.). He asked, "Why do we always get it wrong? And what can we do about it?" We have gotten it wrong on numerous occasions: SARS, Monkeypox, Anthrax, influenza shifts, and Tularemia, to name a few. And those are just the ones we know about; what else is out there? Why do we get it wrong? It's a combination of no data, bad science, poor statistical understanding, and failure to learn from history, Al said. He decried the lack of statistical awareness among many medical doctors, who often generalize a few observations of a particular syndrome over their careers into "In case after case after case..."

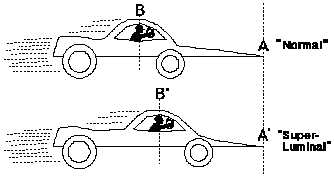

The recent bird flu pandemic scare was officially kicked off by a cover story in Foreign Affairs magazine. With the words "The Next Pandemic" in huge red letters, the accompanying articles by Laurie Garrett got the major media all excited about the imminent "pandemic." Al noted that "pandemic" refers to the global distribution of a disease, not necessarily to its severity. Al proceeded to explain why the present threat is nothing like the 1918 Spanish Flu, which killed millions.

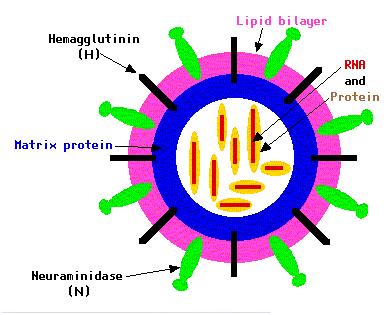

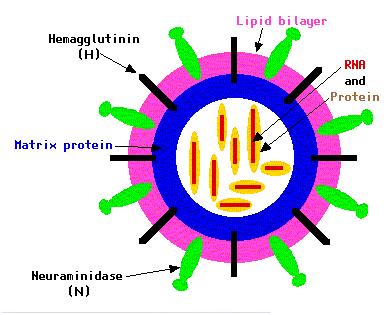

Zelicoff first described influenza nomenclature. Take the cryptic name A/Hong Kong/68 (H3N2): what do all the tags mean? The type is given by a letter (A in the example), followed by the location where the strain was identified (Hong Kong), the year identified (68), and the "subtype" (H3N2). The subtype is key to understanding why the pandemic isn't what it's been cracked up to be. The "H" in the subtype refers to hemagglutinin, a protein that binds the virus to the surface of a respiratory cell by attaching to sialic acid residues; this helps the virus invade cells. The "N" stands for neuraminidase, which cleaves those sialic acid attachments and allows new virus particles to escape the cell, ready to infect another.
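The naming scheme described above is regular enough to parse mechanically. The sketch below handles only the simplified Type/Location/Year (HxNy) pattern used in the talk; real strain names also carry an isolate number (and sometimes a host), which this sketch deliberately does not handle. `FluName` and `parse_flu_name` are hypothetical names, not part of any influenza-surveillance library.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Simplified pattern from the talk: Type/Location/Year (HxNy), subtype optional.
_FLU_NAME = re.compile(
    r"^\s*(?P<type>[ABC])\s*/\s*(?P<location>[^/]+?)\s*/\s*(?P<year>\d{2,4})"
    r"\s*(?:\(\s*H(?P<h>\d+)\s*N(?P<n>\d+)\s*\))?\s*$"
)


@dataclass
class FluName:
    virus_type: str                # influenza type: A, B or C
    location: str                  # where the strain was identified
    year: str                      # year identified, as written (e.g. "68")
    hemagglutinin: Optional[int]   # H subtype number, if given
    neuraminidase: Optional[int]   # N subtype number, if given


def parse_flu_name(name: str) -> FluName:
    """Split a simplified flu strain name into its tags; raise ValueError if it doesn't fit."""
    m = _FLU_NAME.match(name)
    if m is None:
        raise ValueError(f"unrecognized flu name: {name!r}")
    h, n = m.group("h"), m.group("n")
    return FluName(
        virus_type=m.group("type"),
        location=m.group("location"),
        year=m.group("year"),
        hemagglutinin=int(h) if h else None,
        neuraminidase=int(n) if n else None,
    )


print(parse_flu_name("A/Hong Kong/68 (H3N2)"))
# FluName(virus_type='A', location='Hong Kong', year='68', hemagglutinin=3, neuraminidase=2)
```

Keeping H and N as separate fields makes it easy to ask the question the talk turns on, such as whether two strains share an N subtype (H1N1 and H5N1 both carry N1).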

The common wisdom is that only immune system responses (antibodies) to the "H" proteins provide protection, and thus new antibodies are needed for each "H" variant. By that reasoning, immunity to the Spanish Flu virus of 1918 (H1N1) would be useless against the new "Bird Flu" (H5N1). That common wisdom turns out to be wrong, fortunately for us! If someone has immunity to H1N1 and is exposed to H5N1, that virus will be blocked by the immune system as it tries to escape infected cells. People with antibodies to H1N1 will be protected from H5N1.

Zelicoff described Flu Pandemics over the last century. The big one, the 1918 Spanish Flu, was an extreme case, exacerbated immensely by the extremely crowded conditions in war hospitals, trenches, and so on.

In uncrowded conditions, a virus that aggressively attacks and kills its host will die along with that host before it can find a new one. Thus, uncrowded conditions favor non-aggressive strains of virus. But if conditions are very crowded, aggressive strains can gain an advantage: as the victim coughs out his dying breaths, he can infect those next to him, and so on. Crowded conditions like the abysmal mass hospitals of World War I actually selected for more virulent and deadly strains.

Since 1918, several flu strains have impacted the world: H0N1 in 1933 (no pandemic), HxN1 in 1947 (no pandemic), H2N2 in 1957 ("Asian Flu," moderate pandemic), H3N2 in 1968 ("Hong Kong Flu," mild pandemic), and again in 1976 (Fort Dix, no pandemic). H1N1 returned in 1977 (the "Russian Flu") and was pandemic, but mainly for those under age 25, i.e., those who had never been exposed to H1 or N1 in their lifetimes. In 1996, H5N1 ("the Bird Flu") appeared, and has not been pandemic. In 1998, H7N7 and H9N9 appeared (but no pandemic).

The threat to humans from H5N1 is small. Many have immunity by virtue of previous exposure to N1; millions have developed new immunities to H5N1 itself. The real threat of H5N1 is to chicken populations, which are held in crowded pens rivalling any of the horrors of WWI.

Because of the way chickens are often housed, for them, the Bird Flu is a serious threat indeed. But the hype about a human pandemic is extremely overblown, and detracts from truly important problems.

One reason for the "Sky is Falling" panic is that some felt we're "overdue" for a new pandemic. But the actual data show no predictable pattern of pandemic periodicity. Other beliefs behind the "Bird Flu" hysteria include fears of an antigenic shift in the "H" gene, the perception that there's a lack of antibodies to "H5," and the insistence that antibodies to H5 are required for protection. Since some humans have gotten ill with H5N1, and since travel is widespread, we're two-thirds of the way to a pandemic, or so the story goes. Instead, immunity to H5N1 is already spreading through human populations. [On August 1st, 2006, Al sent word that "A CDC study released on Monday (8-1) 'suggests it might be more difficult for the deadly avian flu virus to spark a pandemic than originally feared.'"]
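The "overdue" argument assumes pandemics arrive at roughly regular intervals. A quick way to see how irregular the record is: take the pandemic years listed in this summary (1918, 1957, 1968, 1977), compute the gaps between them, and compare their spread to their mean via the coefficient of variation (near 0 for a strictly periodic process). With only three gaps this is an illustration, not a statistical test of periodicity.

```python
import statistics

# Pandemic years as listed in this talk summary.
PANDEMIC_YEARS = [1918, 1957, 1968, 1977]

intervals = [later - earlier for earlier, later in zip(PANDEMIC_YEARS, PANDEMIC_YEARS[1:])]
mean_gap = statistics.mean(intervals)
sd_gap = statistics.stdev(intervals)   # sample standard deviation of the gaps
cv = sd_gap / mean_gap                 # coefficient of variation: spread relative to the mean

print("gaps between pandemics (years):", intervals)
print(f"mean {mean_gap:.1f}, sd {sd_gap:.1f}, coefficient of variation {cv:.2f}")
```

Gaps of 39, 11, and 9 years give a spread almost as large as the mean, which is hard to reconcile with a schedule under which a pandemic could be "due."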

We should be scared of outbreaks of certain old (and new) diseases, Al said. We've done a miserable job on diseases which turned out to have very little impact, like SARS, but only with lots of luck (and some key observations by Dr. Bruce Tempest) was a serious outbreak of Hantavirus avoided right here in New Mexico. Then there was the case of detection of Tularemia in Washington, D.C. on Sept. 24-25, 2005. This was followed by reports of pneumonia in people who had been on the Mall in protests on those days. But, there was no surveillance, no phone calls to hospitals (nearby or out-of-state), and sloppy diagnoses. We don't have a clue what happened.

What we need, Al said, are some political and international agreements, some technological solutions, and some simple public health solutions. Monitoring is key. Al's company has developed a system, SYRIS, that is being used in Texas to create real-time communication between public health officials, private and public physicians, and (very importantly!) veterinarians. SYRIS simplifies the reporting process: it is used for only a very few patients exhibiting unusual symptoms, but when it's needed, filing a report takes only 15 to 30 seconds. SYRIS allows these data to be tracked in real time, and provides alerts to doctors if required.

Al summarized his points by stating that novel diseases are a certainty, are unpredictable, and are usually associated with animals; we need better models for the spread of diseases, even common ones; international cooperation is needed; and real-time, geographic-based data collection is very much needed.

NMSR thanks Al Zelicoff for a stirring presentation.

January 2005 Meeting: Brian Sanderoff on "The Science of Polling"

At the Jan. 12th meeting, NMSR heard Brian Sanderoff of Research and Polling, Inc., on "The Science of Polling." Sanderoff's group does polling for the Albuquerque Journal, KOAT TV7, and many other groups. Polls can cost anywhere from 8 to 15 thousand dollars, and up to 35 thousand for advanced political efforts. Research and Polling, Inc. (hereafter R&P) has about 30 full-time pollsters, and sometimes also needs external phone banks of up to 200 callers, depending on demand.

R&P does some qualitative work (like focus groups), but mainly performs quantitative polling. The latter can be done by telephone, door-to-door, by mail, or over the Internet. Each method has its own pros and cons. It's easy to generate a large, random sample with telephone surveys; high response rates can be achieved, and sampling error can be calculated precisely. The phone format also allows for top-of-mind image or awareness questions, but has its own problems (telephone poll burnout, for one). For polling about things like job satisfaction, however, self-administered mail surveys can have some advantages. Respondents can be anonymous, everyone in the Universe (e.g., the corporation or division) can be polled, answers can be written out leisurely and thoughtfully, issues can be ranked, written responses may be more candid, and so on. This method works best when the Universe being polled has a vested interest in the endeavor (such as a hospital's physicians). Internet polling can have very fast turnaround times at very low cost, but is less reliable because of non-random sampling, self-selection bias, and the questionable representativeness of the "sample." For example, the answers to the question "Do you Google?" will be very different in an Internet poll than in a random telephone survey.

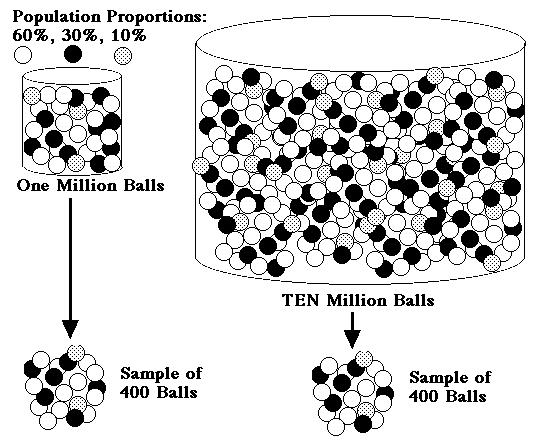

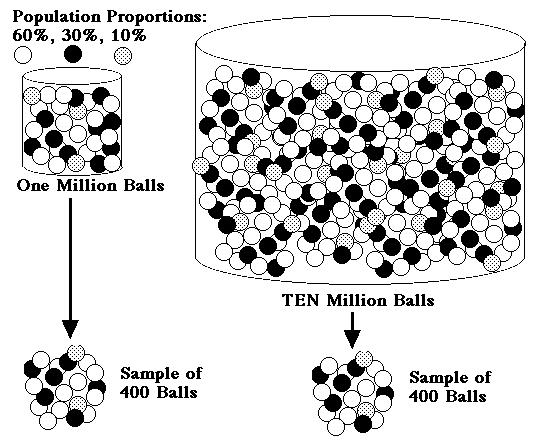

Brian explained that the "plus-or-minus" error figures given for polls depend not on the population size but on the sample size. It's not the size of the Universe (New York City versus Rio Rancho), but the size of the sample that counts. The basic principle is illustrated in the accompanying figure.

Poll accuracy depends on sample size, not on population size. If the two different-sized populations of colored balls above (one million versus 10 million) have the same proportions of colors, then samples of 400 balls drawn from either container will have similar proportions of colors. Using fewer than 400 in a sample would increase the polling error, and using more than 400 would decrease it. However, the polling error in a sample of 400 is the same whether that sample comes from tiny Torrance County or from the whole United States, given reasonable polling conditions.
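One standard way to see why population size barely matters is the finite population correction from survey statistics (a textbook result, not something quoted from the talk). When sampling without replacement, the usual sampling error is multiplied by √((N − n)/(N − 1)), where N is the population size and n the sample size. The sketch below evaluates it for the two containers in the figure; the 2,000-person population is a hypothetical contrast case.

```python
import math


def finite_population_correction(population: int, sample: int) -> float:
    """Factor that scales the usual sampling error when drawing without replacement."""
    return math.sqrt((population - sample) / (population - 1))


for population in (1_000_000, 10_000_000):
    fpc = finite_population_correction(population, 400)
    print(f"N = {population:>10,}: correction = {fpc:.5f}")

# HYPOTHETICAL contrast: the correction only matters when the sample is a large share of the population.
print(f"N = {2_000:>10,}: correction = {finite_population_correction(2_000, 400):.5f}")
```

For one million or ten million, the correction is indistinguishable from 1, so a sample of 400 has essentially the same error either way; only when the sample is a sizable fraction of the population (400 out of 2,000) does the error shrink noticeably.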

Brian gave the simple formula that describes polling errors. For a 95% confidence interval (meaning that, on average, only about one poll in 20 will produce an interval that misses the true value), the critical value is 1.96 standard deviations. The error is largest when the expected split is 50/50 (the last two presidential elections are good examples). If the sample size N is 400, the "Maximum Sampling Error" is √(%For × %Against / N) × 1.96 = √(0.5 × 0.5 / 400) × 1.96 ≈ 4.9%, or roughly ±5%. For N = 200, the error is about ±7%, while for N = 1000, it is about ±3%. If the split is lopsided (say, 90%/10%), the sampling error is reduced: for N = 400, a 90%/10% split gives an expected error of √(0.9 × 0.1 / 400) × 1.96 ≈ 2.9%.
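The sampling-error formula above translates directly into code. This is a minimal sketch using the normal approximation for a proportion (the approximation the quoted formula relies on); `max_sampling_error` is a hypothetical helper name. Evaluating it is also a useful check on the figures: for N = 400 and a 50/50 split it gives about 4.9%, and for a 90/10 split about 2.9%.

```python
import math

Z_95 = 1.96  # two-sided critical z-value for a 95% confidence level


def max_sampling_error(n: int, p: float = 0.5, z: float = Z_95) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion p."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (200, 400, 1000):
    print(f"50/50 split, N = {n:4d}: ±{max_sampling_error(n):.1%}")   # 6.9%, 4.9%, 3.1%
print(f"90/10 split, N =  400: ±{max_sampling_error(400, p=0.9):.1%}")  # 2.9%
```

Because the error shrinks with √N, quadrupling the sample only halves the error, which is why pollsters rarely go far beyond a few hundred to a thousand respondents.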

Brian spent a lot of time talking about polling problems, such as biases. Major biases include low response rates, under-represented demographics (like young adults with cell phones only), language, content, social stigma, respondent expectations, behavior versus attitude, and so on. There are various remedies for these biases, such as ensuring demographic quotas, multiple callbacks, and so forth. Cell phones, the Internet, and the "Do Not Call List" mindset are all changing the playing field of modern pollsters.

Sanderoff made exactly the right number of handouts (30), and predictions versus results were discussed for the recent general election in New Mexico.

In the Q&A, the importance of question preparation came up. Brian agreed that formulation of good questions was a key priority for a good poll. He also discussed "Push Polls," in which pollsters try to influence rather than test. While legitimate pollsters might ask questions like "Does X seem like an effective argument to you?", "Push" pollsters might say things like "Would you still vote for X if you knew he had a DUI? Was a child molester?"

A lively discussion ended the evening. NMSR thanks Brian Sanderoff for a fascinating discussion.

February 2004 Meeting: Professor Barbara Forrest, Darwin Day 2004

New Mexicans for Science & Reason (NMSR) hosted Professor Barbara Forrest (Southeastern Louisiana University, Department of History & Political Science) on "Darwin Day 2004." The free meeting was held on Valentine's Day (February 14th) at the UNM Law Building, and was co-hosted by the New Mexico Academy of Science (NMAS) and the Coalition for Excellence in Science and Math Education (CESE). The meeting came two days after Darwin's actual birthday (Feb. 12th), so that our speaker could make the long trip from Louisiana over the weekend.

Barbara began by saying she was speaking to us as citizens, a role which unites all of us regardless of profession. By "we," she said, she meant citizens concerned with protecting public science education and church-state separation, those who value civic friendship instead of divisive religious politics, and those who regard secular, constitutional democracy as the protector of, not an obstacle to, personal religious freedom in whatever form chosen to exercise it. "We are people who will work hard to protect personal religious freedom, but will work just as hard to keep personal religious preferences from becoming public policy," she said, adding that Intelligent Design (ID) is very much a religious and political movement, and that its proponents are working to get their personal religious preferences enacted as public policy in the nation's schools. Barbara said this goal is called "teaching the controversy."

To explain why the discussion of ID needs to take a new direction, Prof. Forrest reviewed what the ID movement has not done, and also what it is doing. What it has not done, she said, is science. "The ID movement has produced no science in the twelve years since the Wedge's coalescence. Qualified scholars and working scientists, who have produced the science that Wedge scientists have never done, have critiqued ID and amply demonstrated that it is not science. Indeed, the ID movement is not truly about science. Phillip Johnson confirmed this as early as 1996, when the ID movement formally organized as the Center for the Renewal of Science and Culture: 'This isn't really, and never has been, a debate about science. It's about religion and philosophy.' Johnson cites the Gospel of John as the biblical foundation of intelligent design."

Barbara also said that ID creationists demand equal consideration for ID as an "alternative theory" in the name of "fairness," but that "fair" does not necessarily mean "equal." And, she said, their demand ignores the point that teaching children real science is fair, whereas allowing them to be pressed into the service of the Discovery Institute's religious/political agenda is not. Forrest said we must therefore keep one spotlight focused on the fact that, after twelve years, ID is a scientific failure.

Prof. Forrest discussed the underpinnings of ID "science" (irreducible complexity, empirical detection of ID, etc.), and also the leaders of this political movement (Phillip Johnson, William Dembski, Michael Behe, Jonathan Wells, and others from Seattle's Discovery Institute). She discussed the ID onslaught on school boards, and the use of clever buzzwords like "teach the controversy," "teach strengths and weaknesses," "teach objective origins," "teach the alternatives," "teach critical thinking," "academic freedom," and so on, all used to disguise ID's actual creationist foundation. Barbara discussed recent ID activity in Darby, Montana, and said that while ID creationists have always lost big, they spin everything as a victory and press ahead with their agenda anyway.


Barbara made a special point of forestalling any thought that her position is anti-religious. "ID creationists constantly charge that if one is pro-evolution, one is also anti-religion, or that if one is for secular democracy and education, one is automatically against religion." This, she said, is a misconception of the word "secular." Many evangelical leaders are shifting toward the idea that "secular" means "anti-religious." "In their view, if an institution such as government or an academic discipline such as science does not explicitly incorporate religious belief, that institution must be understood as overtly hostile to religion. In short, secularism is rejected by people who want their religious beliefs sanctioned as public policy. But secular means non-religious, not anti-religious. Secularism is a political concept vital to constitutional democracy, and we must rehabilitate the concept as such in the public mind," she said, adding, "The ID agenda is an anti-secular movement. It is another column in the Religious Right."

Barbara gave many examples of the religious underpinnings of the ID movement, including groups like Friends of the Family, the Eagle Foundation, and others. ID is one of many fronts in a "culture war" being waged by Religious Right fundamentalists. She stressed the exclusionary nature of the religious outlook of many ID leaders; for example, Phillip Johnson demonstrated ID's religious exclusionism by slandering the religious faith of Catholic evolutionary biologist Kenneth Miller. Johnson said, "The only reason I have to believe that Kenneth Miller is a Christian of any kind is that he says so. Maybe he's sincere. But I don't know that. If he is, I can say this: you often find the greatest enemies of Christ in the church, even in high positions. There is a kind of person who may be sincere in a way, but is double-minded, who goes into the church in order to save it from itself by bringing it into concert with evolutionary naturalism, for example. And these are dangerous people. They're more dangerous than an outside atheist, like Richard Dawkins, who at least flies his own flag. So I am not impressed that somebody says that he is a Christian of a traditional sort and believes that evolution is our creator. This is, at the very least, a person whose mind is going in two directions, and such people often do a great deal of damage within the church."

In effect, the ID movement wants its strict, narrow view of God, as one who would never stoop to using evolution, to be mandated as official government policy. This is patently unfair, not just to atheists and agnostics, but also to the many religious people who believe evolution was simply God's method of creation. Barbara mentioned several intellectual believers who have found delight in reconciling their views of science and religion, such as evangelical Christian Keith Miller, whose new book is "Perspectives on an Evolving Creation." Barbara stressed that there need not be the type of conflict that ID is creating.

Prof. Forrest said that ID proponents are our fellow citizens, and under the Constitution they are entitled to their exclusionary religious views and their dislike of secularism. But, she said, they are not entitled to have those views enshrined as public policy.

Barbara concluded by reflecting on the long and successful marriage of Charles and ??? Darwin, and wishing everyone a Happy Darwin Day and Happy Valentine's Day. She closed with this passage from Darwin's "The Descent of Man": "Females . . . prefer pairing with the most ornamented males, or those which are the best songsters, or play the best antics; but it is obviously probable that they would at the same time prefer the more vigorous and lively males, and this has in some cases been confirmed by actual observation."

March 2003 Meeting: The Sci-Fi Channel Roswell Dig

New Mexicans for Science & Reason (NMSR) heard Dr. William Doleman (UNM, Office of Contract Archaeology) speak on "The Sci-Fi Channel Roswell Dig." The meeting was held on March 12th, 2003, in the NM Museum of Natural History and Science. Doleman was prominently featured on the Sci Fi Channel's November 22nd, 2002 airing of the documentary "The Roswell Crash: Startling New Evidence," hosted by Bryant Gumbel. (See the March/April 2003 edition of Skeptical Inquirer for Dave Thomas's review of the Sci Fi show.)

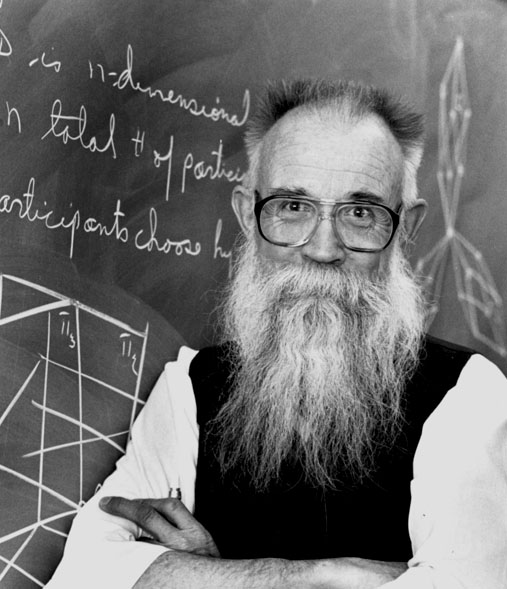

Bill Doleman (Photo by Dave Thomas, copyright 2003)

Doleman said he was a bartender and a freelance writer before becoming interested in archaeology. His field, contract archaeology, accounts for 90% of all money spent on archaeological work. Many of the contracts involve federal mandates such as the National Historic Preservation Act of 1966. Contract archaeologists study the cultural impacts of land development or government projects by determining whether the land has any sites that might qualify for the National Register of Historic Places. Candidate sites may qualify based on location (such as battlefields), persons who lived there (Washington's home), crafts (such as pottery), or significance with regard to history or prehistory. Doleman mentioned Kennewick Man as an example of a far-fetched claim (namely, that this man from many millennia ago is ancestral to Native Americans now living in the same area). UNM's Office of Contract Archaeology (OCA) competes with several private outfits for contracts for what are essentially the cultural equivalents of environmental impact statements.

In 1999, UFO researchers Don Schmitt and Tom Carey approached Doleman's group and asked how he would study a "UFO" site. Doleman laid out a budget for a small project, but it cost too much, and he didn't hear from Schmitt and Carey again for three years. Then, in 2002, they returned, this time with backing from the Sci Fi Channel. Doleman met with Larry Landsman, the Sci Fi Channel's special projects director, and prepared a budget and test plan. The crew began field work at the purported Roswell crash site on September 16th, 2002, and stayed about 9 days. Attendee Ken Frazier asked whether there had been discussion within UNM about being connected to "paranormal crap," but Doleman said there was nary a peep of discord until much later, when Target 7's Larry Barker began asking UNM officials about the OCA's involvement with the Sci Fi show. Doleman said he wasn't there that night to defend what the Sci Fi Channel did.

His team's goals were to:

- search for evidence of a low-angle impact by... something;
- use reported (anecdotal) observations to decide where to perform the search;
- survey the site for debris, or a "furrow" or "gouge."

The show's advisors, Schmitt and Carey, chose the location for the "Roswell Dig," and Doleman said author Kevin Randle also approved the site. Doleman said the rationale for doing the dig was that they had a contract. The Roswell "Incident" is culturally and economically important, both in New Mexico and worldwide. He said the bigshots at UNM considered the work appropriate.

Doleman said the incident should really be called the "Corona Incident," but Corona didn't have a sheriff, so Roswell got all the fame. Until now, though, there hasn't been a search for real physical evidence. He said Schmitt and Carey were brave to put their ideas to the test. Two million people watched the show, and a sequel is planned, he said. For now, everyone's happy, Doleman said: Schmitt and Carey stay on the world's radar, MPH Entertainment gets a nice gold star, and UNM's OCA gets paid.

Doleman talked a bit about archaeology as the forensic science of the past. Clues in sites today can tell archaeologists much about the past, such as whether the original inhabitants used stone boiling or pit roasting. The processes that shape sites can be divided into cultural (C-transforms) and natural (N-transforms). Had they found the remains of an Air Force balloon experiment, that would have been a C-transform.

What were the results of the Roswell Dig? Doleman said they were "more than I expected, less than they [Sci Fi Channel] hoped for." Aerial photographs from before and after 1947 showed no furrow. The electromagnetic conductivity survey showed no furrow. The high-resolution metal detection survey found no obvious debris. Archaeological testing (pits, soil samples, etc.) turned up no obvious debris, but did yield 25 HMOUs (Historic Materials of Uncertain Origin) and 66 soil samples. Backhoe trenching provided one anomaly, a V-shaped feature that might have been a "furrow," but it mostly disappeared when scraped. Doleman said he has written this off "about 95%" now, but still.... There was also a soil stratigraphy study. An "alternative furrow" was found, but interest in it fizzled when it turned up on 1946 aerial photographs (from a year before the Roswell Incident). They also found a beat-up old weather balloon, but one only ten or so years old, not fifty.

The "Furrow" (photograph courtesy & copyright 2003 William Doleman)

A lively question and answer session followed the talk. NMSR thanks Bill Doleman for an informative lecture.

See Also: Bait and Switch on 'Roswell: The Smoking Gun'

January 2003 Meeting: Molecular Evolution

New Mexicans for Science & Reason heard Dr. Rebecca Reiss (New Mexico Tech) on "Molecular Evolution: Studies in Microbes, Middens, and Man" at our January 11th meeting. Rebecca began by discussing her work on xenobiotics, exotic compounds such as the dangerous pollutants called dihalogens, which include ethylene dibromide (EDB) and ethylene dichloride (EDC), and on the bacteria that can metabolize them. Reiss mentioned that there are 175 sites with such pollutants in New Mexico, described with the acronym LUST (Leaking Underground Storage Tanks). In Reiss's pilot project, levels of EDB and EDC have been monitored at selected LUST sites in New Mexico, and the levels of these compounds have been seen to decrease over time. The best explanation is that the decrease is biotic: bacteria are breaking down these dangerous compounds and performing a valuable public service. But the rates are quite slow. How can science speed up this process?
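
If one assumes the declines follow simple first-order kinetics (a common modeling assumption for natural attenuation, not something stated in the talk), two measurements at one site are enough to estimate a rate constant and a half-life. The concentrations in this sketch are hypothetical, not data from Reiss's project.

```python
import math


def first_order_rate(c0: float, c1: float, years: float) -> float:
    """Rate constant k (per year), assuming C(t) = C0 * exp(-k * t)."""
    if c0 <= 0 or c1 <= 0 or years <= 0:
        raise ValueError("concentrations and elapsed time must be positive")
    return math.log(c0 / c1) / years


def half_life(k: float) -> float:
    """Time for the concentration to fall by half under first-order decay."""
    return math.log(2) / k


# Hypothetical site: EDB falls from 40 to 10 micrograms per liter in 4 years.
k = first_order_rate(40.0, 10.0, 4.0)
print(f"k = {k:.3f} per year, half-life = {half_life(k):.1f} years")
```

With these made-up numbers the concentration quarters in four years, which is two half-lives, so the sketch reports a half-life of 2.0 years. A slow natural rate shows up directly as a long half-life.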

That's where the two fields of genomics and proteomics come in. Genomics is the study of DNA, the genetic material, and its RNA products. RNA, in turn, directs the assembly of legions of amino acids into long chains called proteins, which are the molecules of both structure (like bone) and activity (like enzymes for digestion). The study of how the amino acid chains fold up and interact with other molecules is called proteomics. So understanding the bioremediation of such compounds involves identifying the particular species involved (and many species of bacteria are lurking there), isolating the responsible genes, or isolating the actual proteins. Rebecca discussed PCR (polymerase chain reaction), which can be used to "amplify" tiny snippets of DNA into measurable amounts. This technique is very useful, but it has its pitfalls: for example, any contamination (DNA from, say, a human handler) can also be amplified to yield a false signal. Reiss's group has studied using 16S rRNA primers to isolate the xenobiotic genes of the dihalogen-consuming bacteria. Another approach is to study the proteins directly, but proteins can't be amplified the way genes can with PCR. However, you can examine banded protein stains from pools of hundreds of bacteria, and perhaps even deduce the key proteins for the reaction. If you can, then producing the proteins in bulk is quite practical. Also, the amino acid sequence can be converted into a DNA sequence, and appropriate DNA primers can be developed, which will lock onto the corresponding genes in the bacterial genomes.
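
Two quantitative points in this paragraph can be sketched in a few lines of Python: why PCR magnifies even a single contaminating molecule, and why primers designed from an amino acid sequence are ambiguous, since most amino acids are encoded by several codons. The sketch assumes an idealized PCR that at most doubles the target each cycle and uses the standard genetic code; the peptides are made-up examples, not proteins from Reiss's work.

```python
# Standard genetic code. Codons are enumerated with the bases in T, C, A, G
# order at each position (TTT, TTC, TTA, TTG, TCT, ...); '*' marks stop codons.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"

CODONS: dict[str, list[str]] = {}
for i, aa in enumerate(AMINO_ACIDS):
    codon = BASES[i // 16] + BASES[(i // 4) % 4] + BASES[i % 4]
    CODONS.setdefault(aa, []).append(codon)


def pcr_copies(initial: int, cycles: int, efficiency: float = 1.0) -> float:
    """Idealized PCR: each cycle multiplies the copy number by (1 + efficiency)."""
    return initial * (1.0 + efficiency) ** cycles


def primer_degeneracy(peptide: str) -> int:
    """Number of distinct DNA sequences that could encode the peptide."""
    count = 1
    for aa in peptide:
        count *= len(CODONS[aa])
    return count


# One stray human DNA molecule after 30 perfect cycles:
print(f"{pcr_copies(1, 30):,.0f} copies")                  # 1,073,741,824 copies
# Back-translating two short, hypothetical peptides:
print(primer_degeneracy("MW"), primer_degeneracy("LSR"))   # 1 216
```

Methionine (M) and tryptophan (W) each have a single codon, while leucine, serine, and arginine (L, S, R) have six each, which is why primer designers favor stretches of protein rich in the former. Real PCR efficiency is below 100% and the reaction eventually plateaus, so the doubling figure is an upper bound.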

Besides the impressive biology, there are many difficult technical issues involved, such as filtering bacteria from solution, growing bacteria that refuse to be cultured, and so forth.

Rebecca also discussed work with middens (dung heaps near houses), and how molecular markers could assist with archaeological studies. She mentioned her work on human evolution and NUMTs (nuclear-localized mitochondrial DNA sequences). Our cells have many mitochondria outside the nucleus, busy helping us get enough energy. But occasionally DNA from one of these energy powerhouses (which were probably stand-alone microbes before their assimilation, long ago, into our eukaryotic cells) can migrate right into the nucleus, where its rate of mutation/evolution slows down. When we compare our DNA with that of other humans, or other primates, we can observe many different NUMTs, because this migration process is still ongoing. NUMTs can be used in forensic analysis; there are so many of them that comparing several can indicate how closely related a given test DNA sample is to that of an individual or group.
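
The comparison idea can be sketched by treating each DNA sample as the set of NUMT insertion sites it carries and scoring the overlap. The Jaccard index used here, the site labels, and the genotypes are all hypothetical choices for illustration; real forensic work relies on population frequencies and formal statistics rather than a bare overlap score.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Shared markers divided by all markers present in either sample."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical presence/absence calls at five NUMT insertion sites.
test_sample = {"numt1", "numt2", "numt4"}
references = {
    "person_x": {"numt1", "numt2", "numt4", "numt5"},
    "person_y": {"numt3", "numt5"},
}

for name, markers in references.items():
    print(name, jaccard(test_sample, markers))   # person_x 0.75, then person_y 0.0
```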

NMSR thanks Rebecca Reiss for a delightful presentation.

November 2002 Meeting: Structure of the Universe

Trish Henning

New Mexicans for Science & Reason heard Associate Professor Trish Henning (UNM, Director, Institute for Astrophysics) on "The Structure of the Universe." The meeting was on November 13th. Trish started by discussing the distribution of mass. Where is it? What is the difference between luminous mass and so-called "dark matter"? We can see nearby galaxies (Andromeda in the northern hemisphere, the Magellanic Clouds in the southern), and in the last few decades we have learned quite a bit about the distribution of galaxies through the universe. One of the big surprises was the discovery of the Local Supercluster about 50 years ago. Since then, better and broader surveys have been performed, revealing huge voids between clusters of galaxies.

From the 2dF Galaxy Redshift Survey, showing voids and structure over billions of light years

Trish talked about how the expansion of the universe is used to estimate the distances of galaxies from their observed recession speeds, which are given by the Doppler shift of galactic emissions. Because gravitational interactions can act against the motion of expansion (locally, at least), clusters of galaxies tend to get smeared out in Doppler-shift plots. Trish talked about the difficulty of seeing galaxies through the disc of the Milky Way, and how radio telescopes use the hydrogen 21-cm line to penetrate galactic dust. She talked about ongoing galactic surveys, including the one she works on in Australia, and New Mexico's Sloan Digital Sky Survey. It looks like we're finally able to discern the scale of galactic clustering across the universe.
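
The distance estimate Trish described rests on Hubble's law, v = H0 d. A minimal sketch, assuming the low-redshift approximation v ≈ cz (valid only for redshifts z much smaller than 1) and an assumed Hubble constant of 70 km/s per megaparsec, shows both the conversion and the smearing: galaxies at the same true distance but with different peculiar (non-expansion) velocities land at different inferred distances. The ±1000 km/s spread is an illustrative value, not a figure from the talk.

```python
C_KM_S = 299_792.458   # speed of light in km/s
H0 = 70.0              # assumed Hubble constant, km/s per megaparsec


def recession_velocity(observed: float, rest: float) -> float:
    """v = c * z with z = (observed - rest) / rest; wavelengths in the same unit."""
    z = (observed - rest) / rest
    return C_KM_S * z


def hubble_distance(velocity_km_s: float, h0: float = H0) -> float:
    """Distance in megaparsecs from Hubble's law v = H0 * d."""
    return velocity_km_s / h0


# A 21-cm hydrogen line (rest wavelength about 21.106 cm) observed 1% longer:
v = recession_velocity(21.106 * 1.01, 21.106)
print(f"v = {v:.1f} km/s, d = {hubble_distance(v):.1f} Mpc")   # v = 2997.9 km/s, d = 42.8 Mpc

# Three members of one cluster at a true distance of 50 Mpc, with peculiar
# velocities of -1000, 0 and +1000 km/s on top of the expansion:
for peculiar in (-1000.0, 0.0, 1000.0):
    print(f"{hubble_distance(H0 * 50.0 + peculiar):.1f} Mpc")  # 35.7, 50.0, 64.3
```

A single cluster thus appears stretched along the line of sight by roughly ±14 Mpc, which is the smearing seen in Doppler-shift plots.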

NMSR thanks Trish Henning for a delightful presentation.

October 2002 Meeting: Panel Discussion on the "Language Gene"

New Mexicans for Science & Reason heard a panel discussion featuring Distinguished Biology Professor Randy Thornhill (UNM), retired Linguistics Professor Hank Beechhold, and anthropologist Ted Cloak, on the implications of a newly found "Language Gene" (re Svante Pääbo's and colleagues' research on FOXP2). The October 9th meeting got started with a brief introduction by Dave Thomas. It all begins with an extended family (the KE family), half of whose members exhibit a severe hereditary language disorder. The disorder involves unintelligibility, trouble forming plurals, and more, and even shows up in brain scans. Genetic studies of family members identified a gene, FOXP2, as the likely culprit in this language disorder. Later, Pääbo's team compared the FOXP2 of unaffected humans and several other primates, and found that the normal human form of FOXP2 was different from that of chimps and the other apes, and from that of other mammals as well. Earlier this year, FOXP2 was heralded as a "Language Gene" distinguishing man from the apes.

The first panelist, biologist Randy Thornhill of UNM, stressed that neither genes nor environment alone determines the development of traits; rather, it is the interaction of the two (the Interactionist Perspective). Both environmental determinism and genetic determinism have been tossed on the trash heap, he said. Evidence supports the now-accepted Interactionist model, so one can't consider any given trait as more environmental than genetic, or vice versa. Language has important social causes, like learning, but it also has physical aspects involving muscles, vocal cords, and so forth. Even before FOXP2's discovery there was no question that genes were involved, Randy said; we just didn't know which of the tens of thousands of genes affected language. And many genes are involved. The interesting question is not whether language is biological, Randy said; we knew that already! Nor is it whether evolution did it, but which evolutionary process did it. It could have involved direct selection for the trait or traits, or indirect ("coat-tails") selection. Genetic drift is out as an explanation because language is much too complicated. Language shows design, the hallmark of adaptation. It can be used to communicate, to deceive, or to manipulate. Because language involves multiple adaptations, Randy said, it clearly isn't due to indirect selection. Language is directly selected. We pay attention to it; it has become a fitness indicator.

Randy mentioned his work on symmetry, and his view that facial and body symmetries are likewise fitness indicators. (This was the topic of our June 1997 meeting, and is reported in the July 1997 NMSR Reports.) Thornhill also spoke to NMSR on biology and rape at our May 2000 meeting (June 2000 NMSR Reports).

Biologist Randy Thornhill

Panelist Hank Beechhold, a retired professor of linguistics, spoke about the origin of language. We don't know what form the first language took, he said. Some have speculated it developed from imitating animal sounds (the "Bow-Wow Theory"), other sounds (the "Ding-Dong Theory"), or perhaps grunts. Language is related to consciousness itself, Hank said, and consciousness itself is related to language. Hank's second point was that "language" is much harder to define than most people think. Communication? Even single-celled creatures communicate with their environment. Is language merely "a means of phonetic communication shared by groups of humans"? What, then, of writing, or signing? No, speech is simply one medium or channel, not language itself. Therefore, Hank said, language precedes speech, as competence precedes performance. While FOXP2 does affect speech, Hank said, there are undoubtedly many other genes involved. Hank mentioned an African gray parrot studied by Pepperberg, who found the bird able to actually communicate verbally at the level of a young child. It would be foolish to lay all language at the feet of FOXP2, but it's a player. Perhaps it was selected for some property like facial expressions after language and speech had appeared. Hank concluded by mentioning that adult Neanderthals had vocal tracts like those of young infants, and that their ability to articulate speech would have been quite limited. Was this related to Neanderthal extinction? Did Neanderthals have FOXP2? Is FOXP2 related to speech, or to language? (At this point, it was suggested that we might try to get some Neanderthal DNA and check it for FOXP2 differences.)

Linguistics professor Hank Beechhold

The final panelist, anthropologist Ted Cloak, commented that the panel was turning out to be more of a love feast than a hotbed of controversy. Like the other two speakers, Ted said FOXP2 is a language gene, but not the "language gene." Ted described the KE family and the nature of their language disorders. One problem they have is generalizing about number, gender, or sex. For example, some words are easy to pluralize (dog, dogs), but others have special rules (sheep, sheep, or cow, cattle). The affected members of the KE family see every pluralization as a "special" case. They have to learn that "dogs" is the plural of "dog," and also that "cats" is the plural of "cat," and so on for all nouns.
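
The difference Ted described, between applying a general rule and storing every form separately, can be sketched as a toy contrast in Python. This illustrates rule-based versus rote pluralization only; it is not a model of how the KE family members actually process language, and the word lists (including the nonsense word "wug," borrowed from classic child-language experiments) are illustrative choices.

```python
from typing import Optional

# Stored exceptions; every other noun follows the general rule.
IRREGULAR = {"sheep": "sheep", "child": "children", "mouse": "mice"}


def plural_by_rule(noun: str) -> str:
    """Generalizing speaker: use a stored exception if one exists, else add -s."""
    return IRREGULAR.get(noun, noun + "s")


class RoteLearner:
    """Treats every plural as a special case that must be memorized."""

    def __init__(self) -> None:
        self.memorized: dict[str, str] = {}

    def learn(self, singular: str, plural: str) -> None:
        self.memorized[singular] = plural

    def plural(self, noun: str) -> Optional[str]:
        # Returns None for any noun it has not been taught explicitly.
        return self.memorized.get(noun)


rote = RoteLearner()
rote.learn("dog", "dogs")
rote.learn("cat", "cats")
print(plural_by_rule("wug"), rote.plural("wug"))   # wugs None
```

The rule-user handles a brand-new noun at once, while the rote learner can only repeat pairs it has already been given, however regular they are.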

(At this point, someone suggested that people who had trouble with tenses or plurals, or maybe even those who say "nuke-u-lar," might soon be described as "FOXP2 challenged.") Ted discussed how the gene might have been changed by a selective sweep and fixation event, but noted that this hasn't been proved yet. What would happen if the chimp FOXP2 were somehow introduced into a human? It wouldn't necessarily be the same as what happens in the KE family, because their genetic defect is a mutation of the working human gene, and might not be "chimp-like" at all. Of course, we can't put chimp DNA into a human child, so we'll have to figure it out some other way. Ted again emphasized that FOXP2 may be a player, but it's not the only one. Language is not a necessary precursor to culture; it arises as an enhancement of culture. One of the first critical human capabilities was fine imitation. Ted's conclusion was that culture most likely preceded language, not the other way around.

NMSR thanks panelists Thornhill, Beechhold, and Cloak for a most interesting discussion.

March 2002 Meeting: Cody Polston & Bob Carter on Ghost Hunting

by Dave Thomas: nmsrdaveATswcp.com (Help fight SPAM! Please replace the AT with an @.)

New Mexicans for Science & Reason (NMSR) heard Cody Polston and Bob Carter of the Southwest Ghost Hunters Association (www.sgha.net) speaking on "Hunting for Ghosts" on March 13th, 2002.

Cody Polston

Bob Carter kicked the evening off by saying that the SGHA is interested in finding out what causes ghostly visions, even if there is a prosaic explanation. One of the possibilities they are looking at is that visions of specters might be caused by abnormal electromagnetic fields, as has been hypothesized by Michael Persinger. Cody Polston noted that photographs alone cannot prove ghostly visitations, and that even negatives can be faked. Cody mentioned that the group's bylaws prevent them from using psychics in their investigations, as you cannot use one paranormal phenomenon to validate another. This attitude has earned the SGHA criticism from other ghost groups, which routinely employ psychics in their investigations. He described a curious incident they investigated in Texas, where cars left parked on a train track are mysteriously "pushed" out of harm's way, with small handprints visible in flour dusted onto the rear of the car. However, the site is a gravity hill, so the motion of the car is no real surprise. Furthermore, the group found that the flour merely reveals handprints that were already there, made visible by oils and residue left behind. This did not make them popular with the Texas ghost group.

The group does "investigations" once or twice a month, and employs some low-tech magnetic field sensors to look for odd fields. They typically measure from 5 to 40 Hertz only. Cody and Bob described some of the curious events they have observed, such as glasses blowing up at Maria Theresa's restaurant, flashing lights at La Placita, weird knocking at the Church Street Café in Santa Fe, and sliding chairs at Los Ranchos de Corrales restaurant. Cody noted that even if the group could prove that photographed "orbs" were real phenomena, it's still a big leap to prove they are ghosts.

All in all, it was a friendly encounter. Cody and Bob do think there may be something to ghost stories, and that's what keeps them going. But they don't declare that every fuzzy blob in photographs is a "ghost," either. The group could use help with basic electromagnetics and ideas for sensors, if anyone wants to help out. Their investigations are open to those who want to tag along.

NMSR thanks Cody and Bob for an interesting talk.

February 2002 Meeting: Stuart Kauffman on Investigations

by Dave Thomas

New Mexicans for Science & Reason (NMSR) heard Stuart Kauffman of the Santa Fe Institute and BiosGroup speaking on "Investigations" on February 13th, 2002.

Kauffman is a biologist, a former professor of biochemistry at the University of Pennsylvania, and a professor at the Santa Fe Institute. He is the author of The Origins of Order: Self-Organization and Selection in Evolution (1993), and coauthor with George Johnson of At Home in the Universe (1995). He talked to NMSR about his third book, Investigations.

Kauffman began by introducing the concept of an "autonomous agent." Is a bacterium, which could be said to act on its own behalf as it gleans food from its environment, an autonomous agent? Stuart then gave his definition of such an agent: "A self-reproducing system that can do one thermodynamic work cycle." He raised and lowered a pen, illustrating a work cycle and thus demonstrating his own status as an autonomous agent. The bacterium is also such an agent, of course. But something that reproduces without doing work, such as a crystal, isn't an autonomous agent. Even DNA is not an autonomous agent; it specifies order, but does not perform work cycles. Kauffman mentioned that Schrödinger predicted that life would require aperiodic crystals bearing a code, nine years before Watson and Crick elucidated the structure of DNA. Stuart said the question of what makes a cell alive is still open.

One of the perplexing problems of the origin of life (not to be confused with the subsequent evolution of diverse life forms over billions of years) is that DNA codes for proteins (strings of amino acids), but proteins are required to help copy the DNA. As yet, we haven't been able to make a molecule that makes copies of itself without the assistance of other molecules like proteins. It's hard to get RNA (ribonucleic acid) to duplicate itself, because too many G's and C's (guanine and cytosine nucleotides) make it fold up in knots. But it's probably not impossible, Kauffman said. He mentioned Gunter's research on a "cousin" of RNA, in which hexamers made copies of themselves without any proteins to assist. Stuart also mentioned work on a 32-amino-acid peptide that catalyzes the joining of two smaller fragments (of 15 and 17 amino acids) into more copies of the 32-amino-acid sequence. He said this self-reproducing protein "blew the field wide open."

He went on to discuss systems in which components catalyze each other, but not themselves. If two molecules A and B can catalyze each other's formation, as has been demonstrated, why can't three? Or four? Or hundreds or thousands? Kauffman called such assemblages "autocatalytic systems." He talked about a button-and-thread model of self-organization, in which buttons are strewn on a floor and pairs of buttons are tied together at random with threads. At first, most of the buttons are free; there are a few pairs, and even some triplets. But as more threads are added, the number of interconnections rises steeply. Larger clusters are joined into still larger clusters, until BAM! A giant cluster forms.
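
Kauffman's button-and-thread picture is a random graph, and the sudden appearance of a giant cluster is a well-known result: for a large number of buttons, the largest cluster jumps from a negligible fraction to a sizable one once the ratio of threads to buttons passes about 0.5. The sketch below, an illustration rather than anything Kauffman presented, ties random pairs together using a union-find structure and reports the largest cluster; the button count and ratios are arbitrary choices.

```python
import random


def largest_cluster_fraction(n_buttons: int, n_threads: int, seed: int = 0) -> float:
    """Tie random pairs of buttons together; return the largest cluster's share."""
    if n_buttons <= 0:
        raise ValueError("need at least one button")
    rng = random.Random(seed)
    parent = list(range(n_buttons))   # union-find forest: each button starts alone
    size = [1] * n_buttons

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    largest = 1
    for _ in range(n_threads):
        a, b = find(rng.randrange(n_buttons)), find(rng.randrange(n_buttons))
        if a == b:
            continue                   # both ends already in the same cluster
        if size[a] < size[b]:
            a, b = b, a                # attach the smaller cluster to the larger
        parent[b] = a
        size[a] += size[b]
        largest = max(largest, size[a])
    return largest / n_buttons


n = 10_000
for ratio in (0.25, 0.5, 0.75, 1.0, 1.5):
    share = largest_cluster_fraction(n, int(ratio * n))
    print(f"threads/buttons = {ratio:.2f}: largest cluster = {share:.1%}")
```

Below the threshold the largest cluster stays a tiny fraction of the buttons; above it, most buttons end up in one cluster (roughly 80% at a ratio of 1.0 and about 94% at 1.5, by the standard random-graph result).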

Kauffman then introduced the concept of the "adjacent possible." If you have a bunch of molecules and reactions, what collection of molecules will exist after one reaction step? There are many possibilities, which constitute the immediate "adjacent possible." For example, once there were just a few organic molecules; now there are trillions. "The biosphere has expanded into its adjacent possible," Kauffman said. Additionally, the number of ways to make a living has exploded.
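
Kauffman's point is that the biosphere's adjacent possible cannot be listed in advance. A deliberately trivial toy chemistry, which can be listed, still shows how fast the adjacent possible grows. In this hypothetical sketch, molecules are strings over two building blocks, the only reaction is joining two existing molecules end to end, and the adjacent possible is every product of one such step that does not yet exist.

```python
from itertools import product


def adjacent_possible(molecules: set[str]) -> set[str]:
    """New molecules reachable in one ligation step (any two existing
    molecules joined end to end, in either order)."""
    return {a + b for a, b in product(molecules, repeat=2)} - molecules


world = {"A", "B"}   # two hypothetical building blocks
for step in range(1, 4):
    new = adjacent_possible(world)
    world |= new
    print(f"step {step}: {len(new)} new molecules, {len(world)} total")
# step 1: 4 new molecules, 6 total
# step 2: 24 new molecules, 30 total
# step 3: 480 new molecules, 510 total
```

Even with one reaction type, each step's adjacent possible is far larger than the last; real chemistry, with many reaction types and unforeseeable uses for products, is what makes Kauffman's version impossible to enumerate.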

Stuart then discussed work cycles and energy. Work cycles are a way to link spontaneous and non-spontaneous reactions. For example, the hexamer (a six-unit molecule) that catalyzes the joining of pairs of trimers (three-unit molecules) performs work cycles. It "eats" energy (supplied as photons of light) and trimers, and produces more hexamers like itself. The process can oscillate back and forth, respond to feedback, and so on. Kauffman criticized the standard definition of work as force times distance, and said he prefers to define work as "the constrained release of energy." But where do the constraints come from? Who makes the pistons in Carnot engines? It takes work to make the constraints, and it takes the constraints to produce work. Work can be non-propagating, like shooting a cannonball into a field; while a hole and hot dirt are created, no useful work is performed. But arrange for the cannonball to turn a paddle wheel as it zooms away, and that can be harnessed to, say, pump water and irrigate the fields. This is "propagating" work, he said.

Cells make lipids of different types that combine in layers, producing vital cell membranes; these are the "constraints" of the cell. And the cell makes copies of itself, providing closure. Kauffman said it's not just a question of matter, energy, or Shannon information: it is an organized system that propagates itself, a "living" state of matter and energy.

Unlike physics, in which the "adjacent possible" states of, say, atoms in a sealed vessel can be easily described, the biosphere is not finitely pre-describable. As an example, Kauffman discussed Darwinian pre-adaptations. We all have hearts, needed for pumping blood. But what if the heart could have another, as-yet-unanticipated function? What if some one person's heart happened to be sensitive to vibrations typical of impending earthquakes, and this sensitivity caused the person to run to safety outside just before a massive earthquake struck? That person might have more descendants than others without sensitive hearts, and if there were many earthquakes, that ability might spread to many descendants. We can't possibly anticipate all the possible pre-adaptations, and so we can't even define the "adjacent possible." Stuart suggested that a possible "4th Law of Thermodynamics" for the biosphere is that it will expand into the adjacent possible as fast as it can get away with it. But Kauffman hasn't figured out how to mathematize it, because he can't even list all the possibilities.
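
The earthquake story is a thought experiment, but the arithmetic of how a rare advantageous trait spreads can be sketched with the textbook one-locus haploid selection recurrence, p' = p(1 + s) / (1 + ps). The starting frequency (one carrier in a thousand) and the 5% per-generation advantage are made-up numbers chosen only for illustration.

```python
def next_frequency(p: float, s: float) -> float:
    """Haploid selection: carriers leave (1 + s) times as many offspring."""
    return p * (1.0 + s) / (1.0 + p * s)


def generations_to_reach(p0: float, s: float, target: float) -> int:
    """Generations until the carrier frequency first reaches `target`."""
    if s <= 0 or not 0 < p0 < 1 or not 0 < target < 1:
        raise ValueError("need s > 0 and frequencies strictly between 0 and 1")
    p, generations = p0, 0
    while p < target:
        p = next_frequency(p, s)
        generations += 1
    return generations


print(generations_to_reach(0.001, 0.05, 0.5))   # 142
```

Under this recurrence each generation multiplies the carriers' odds by exactly (1 + s), so even a small edge compounds: with these numbers the trait goes from one person in a thousand to a majority in 142 generations.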

A lively question and answer period followed the donut break. In response to one question, Kauffman cautioned that we don't really know what the effects of genetically modified foods will be, because we don't know for sure how those genes will interact with others in the field. Paul Gammill asked about teaching "Intelligent Design" in schools. Kauffman replied that design theorists have not made their case to biologists, and don't deserve equal time in schools, because the fact of evolution appears to be incontrovertible. Gammill proceeded with a laundry list of questions regarding chirality, complexity, and so forth. Kauffman said these questions included some very good ones, and he provided some good answers and areas for exploration. He said the Design Theorists need to identify their Designer if they expect to develop their theory as a science.

Stuart Kauffman concluded with a brief discussion of Maxwell's Demon, a hypothetical construct that could appear to violate the laws of thermodynamics by, say, allowing only atoms of a certain speed to go through a hole connecting separate containers, thus changing the state into one of disequilibrium. While no Demon can exist in the domain of atoms alone, it's different in the biosphere. There, Darwinian pre-adaptations can be "measured" by the biosphere (much as the Demon would measure an atom's speed), and adaptations that are favored in the environment can be "catalyzed." He concluded that "The biosphere keeps finding new ways to measure displacements from equilibrium, and how to get work from them."
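
A toy simulation makes the Demon's trick concrete. In this hypothetical sketch, molecules keep fixed speeds (no collisions), and a door-keeper lets fast molecules pass only from left to right and slow ones only from right to left, using the median speed as an arbitrary threshold. Starting from a random, well-mixed split, the two sides end up with very different average kinetic energies. The toy ignores the thermodynamic cost of the Demon's own measurements and memory, which is what rescues the second law in the physical analysis.

```python
import math
import random


def demon_sort(n: int = 2000, steps: int = 20_000, seed: int = 1) -> tuple[float, float]:
    """Return the mean kinetic energy (arbitrary units) on the left and right
    sides after the Demon has worked the door for `steps` arrivals."""
    rng = random.Random(seed)
    # Speeds from three Gaussian velocity components (a Maxwell-Boltzmann shape).
    speeds = [math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(3))) for _ in range(n)]
    threshold = sorted(speeds)[n // 2]           # median speed
    side = [rng.choice("LR") for _ in range(n)]  # random, well-mixed start

    for _ in range(steps):
        i = rng.randrange(n)                     # some molecule reaches the door
        if side[i] == "L" and speeds[i] > threshold:
            side[i] = "R"                        # fast ones may go left -> right
        elif side[i] == "R" and speeds[i] <= threshold:
            side[i] = "L"                        # slow ones may go right -> left

    def mean_energy(which: str) -> float:
        energies = [v * v / 2 for v, s in zip(speeds, side) if s == which]
        return sum(energies) / len(energies)

    return mean_energy("L"), mean_energy("R")


left, right = demon_sort()
print(f"mean kinetic energy: left {left:.2f}, right {right:.2f}")
```

Running it with `steps=0` leaves the two sides with nearly equal averages, which is the equilibrium the Demon pushes the system away from.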

NMSR thanks Stuart Kauffman for a mind-expanding talk!

November 2001 Meeting: An Insight Into Relativity

by John Geohegan

Einstein's Special Theory of Relativity (SRT) is generally misunderstood for several reasons. Most of us have no need to apply its principles, nor do we have any experience with objects moving at close to the speed of light. Even so, we would like to understand SRT. Popular explanations refer to moving objects gaining mass and shrinking in the direction of motion, and moving clocks running slow, but these explanations refer only to appearances, in much the same manner as statements about the sun rising in the morning, moving westward across the sky, and setting in the evening. We accept that the sun appears to rise in the morning and we can calculate exactly when the "rise" will occur, but a more accurate and lengthier statement would deny that the sun truly rises and explain the appearance by referring to the rotation of the earth.

Just as the formulas for calculating the sun's apparent motion are likely to contribute nothing to understanding the reason for the appearances, applying the formulas of SRT really doesn't reveal why objects appear to contract and clocks appear to run slow. It's perfectly possible to apply the set of relativistic equations called the Lorentz transformation and obtain a correct numerical answer without having a clue as to why the equations work so well. This is number-crunching without insight.

The following example offers a simple way of gaining insight into relativity.

Imagine that two spaceships, piloted by Alfred and Brian, have just passed each other, and that they synchronized their clocks at 12:00 at the moment of closest approach. As they draw farther apart, each sends out a flash of light precisely as his own clock indicates each hour. Brian will receive Alfred's 12:00 signal when his clock reads 12:00, because the signal has no distance to travel. Alfred's 1:00 signal, however, will be received at some time later than 1:00, depending on their relative velocity. To make things easy, suppose the velocity is great enough that Brian receives Alfred's hourly signals every two hours by his (Brian's) clock. SRT tells us that the same conditions will apply to Alfred: he will receive Brian's clock signals every two hours, at 12:00, 2:00, and 4:00 by his (Alfred's) clock.

Consider the signal sent out by Alfred at 1:00. It is received by Brian when his clock reads 2:00 and is reflected back at that moment. Like Brian's own 2:00 flash, it reaches Alfred when his (Alfred's) clock reads 4:00. Not being able to see Brian's clock, Alfred calculates that the signal must have reached Brian at 2:30 (halfway between 1:00 and 4:00). If informed that Brian's clock actually read 2:00, Alfred might conclude that it was a half-hour slow. Thus we see what Einstein discovered: measurements of time intervals depend upon relative velocity. Of course, if Brian sends out a signal when his clock reads 1:00, exactly the same values will be repeated, and he will see that Alfred's clock is apparently running slow. Without the insight made possible by this simple example, it's possible to misinterpret SRT as claiming that Alfred's clock is running slower than Brian's at the same time as Brian's is running slower than Alfred's.
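
Alfred's bookkeeping can be checked numerically. The sketch below takes the example's numbers: flashes sent an hour apart arrive two hours apart, a ratio we call k here. It applies the radar rule from the text, which says the reflection happened halfway between sending and echo, at half the round-trip distance. This approach is close in spirit to what physicists call Bondi's k-calculus. The sketch then cross-checks the result against the standard time-dilation and Doppler formulas and against the Lorentz transformation mentioned earlier. The units (hours, light-hours, c = 1) and the variable names are our own assumptions.

```python
import math

# Numbers from the example; time in hours after 12:00, distance in light-hours (c = 1).
k = 2.0                      # Doppler factor: flashes sent 1 h apart arrive 2 h apart
t_sent = 1.0                 # Alfred sends his 1:00 flash
t_brian = k * t_sent         # Brian's clock reads 2:00 when it arrives
t_echo = k * t_brian         # the reflection gets back to Alfred at 4:00

# Alfred's radar rule: the reflection happened halfway between send and echo,
# at half the round-trip distance.
t_event = (t_sent + t_echo) / 2          # 2.5 h, i.e. 2:30 by Alfred's clock
distance = (t_echo - t_sent) / 2         # 1.5 light-hours
speed = distance / t_event               # Brian's speed as a fraction of c
gamma = t_event / t_brian                # Alfred's hours per hour of Brian's clock

# Cross-checks against the standard special-relativity formulas.
assert math.isclose(gamma, 1 / math.sqrt(1 - speed**2))        # time dilation
assert math.isclose(k, math.sqrt((1 + speed) / (1 - speed)))   # relativistic Doppler

# Lorentz transformation of the reflection event into Brian's frame
# (both ships were at x = 0 at 12:00).
t_prime = gamma * (t_event - speed * distance)   # matches Brian's 2:00
x_prime = gamma * (distance - speed * t_event)   # 0: Brian is at his own origin
print(f"speed = {speed:.2f}c, gamma = {gamma:.2f}, Brian's frame: t' = {t_prime:.2f} h, x' = {x_prime:.2f}")
```

The speed comes out to 0.6c. Alfred's 2:30 against Brian's 2:00 is the familiar time-dilation factor of 1.25. The Lorentz transformation gives the same numbers, but the radar picture shows where they come from.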

The same example, slightly extended, can be used to explain the so-called "twin paradox," which is not a paradox at all. Suppose that, after traveling for two hours by his clock, Brian suddenly turns around for a return trip at the same relative velocity. By then he has received Alfred's 12:00 and 1:00 signals, and he now starts receiving signals at the rate of two per hour instead of one every two hours. On his two-hour return home he would thus receive four more signals from Alfred (2:00 through 5:00), arriving home just as Alfred was sending his 5:00 signal. Alfred would have aged five hours while Brian aged only four. This problem illustrates the relativistic nature of time intervals.
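
The twin count can be tallied the same way. The hypothetical helper below assumes, as the example does, that Brian turns around instantly and comes back at the same speed. It adds up how much of Alfred's clock Brian sees on each leg: Alfred's flashes are stretched by the factor k on the way out and compressed by k on the way back.

```python
def twin_trip(k: float, outbound_hours: float) -> tuple[float, float]:
    """Elapsed time for (Brian, Alfred) when Brian flies out and straight back.

    k is the Doppler factor while the ships separate; on the way back the
    flashes arrive k times faster instead of k times slower.
    """
    return_hours = outbound_hours                   # same speed, so same duration home
    alfred_seen_outbound = outbound_hours / k       # Alfred's hours Brian has seen by turnaround
    alfred_seen_return = return_hours * k           # Alfred's hours seen on the way home
    brian_elapsed = outbound_hours + return_hours
    # Once Brian is home, the flash he is receiving is the one Alfred is sending
    # right now, so what he has seen equals all of Alfred's elapsed time.
    alfred_elapsed = alfred_seen_outbound + alfred_seen_return
    return brian_elapsed, alfred_elapsed

brian, alfred = twin_trip(k=2.0, outbound_hours=2.0)
print(f"Brian aged {brian} hours, Alfred aged {alfred} hours")  # Brian aged 4.0 hours, Alfred aged 5.0 hours
```

For any k, the ratio of Alfred's time to Brian's works out to (k + 1/k)/2, which is the time-dilation factor. With k = 2 that ratio is 1.25, matching five hours against four. The asymmetry comes from Brian, and only Brian, switching from the "stretched" to the "compressed" stream of signals.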

It must not be thought that SRT deals only with some sort of illusion. Einstein's theory unified electricity and magnetism, and it makes meaningful predictions that are tested every day. The Global Positioning System tests them millions of times each day, worldwide, and reveals the magnificence and practicality of relativistic calculations.
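
For a sense of the numbers behind the GPS claim, here is a rough estimate of the velocity (special-relativity) part of the GPS satellite clock correction. It assumes a circular orbit of radius about 26,560 km. It also uses the low-speed approximation v^2/(2c^2) for the fractional slowing of a moving clock. Both are simplifications. GPS must also correct for a larger gravitational (general-relativity) effect of the opposite sign, which this sketch does not compute. The combined correction is usually quoted as about 38 microseconds per day.

```python
import math

GM_EARTH = 3.986004418e14   # m^3/s^2, Earth's gravitational parameter
C = 299_792_458.0           # m/s, speed of light
ORBIT_RADIUS = 26_560e3     # m, approximate GPS orbital radius (assumed circular orbit)
SECONDS_PER_DAY = 86_400

speed = math.sqrt(GM_EARTH / ORBIT_RADIUS)       # circular-orbit speed
rate_offset = speed**2 / (2 * C**2)              # low-speed approximation of 1 - 1/gamma
lag_us_per_day = rate_offset * SECONDS_PER_DAY * 1e6
print(f"orbital speed {speed / 1000:.2f} km/s, satellite clocks lag about {lag_us_per_day:.1f} microseconds/day")
```

A lag of about 7 microseconds per day sounds tiny. Multiplied by the speed of light, however, it corresponds to roughly two kilometers of ranging error per day if left uncorrected.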

The following images, by Dave Thomas, show how Doppler measurements of the binary stars in the system Castor C (in the constellation Gemini) agree with Einstein's theory (the speed of light in vacuum is constant). They do NOT agree with the predictions of theories in which the speed of light depends on the speed of the light-emitting source.

Expected Time/Velocity Curve of One Star of Castor C (Binary System), if Einstein was RIGHT (Light travels at 299,792,458 meters/sec)

Expected Time/Velocity Curve of One Star of Castor C (Binary System), if Einstein was WRONG (Light travels at c = 299,792,458 m/sec ± motion of source); here, Castor C is 5 light-years away. At orbital speeds of 0.0004c, transit times will vary from 5 light-years / (1 - 0.0004) light-years/year = 5.002 years = 1827.0 days, to 5 light-years / (1 + 0.0004) light-years/year = 4.998 years = 1825.5 days, a spread of about 1 1/2 days.

Expected Time/Velocity Curve of One Star of Castor C (Binary System), if Einstein was WRONG (Light travels at c = 299,792,458 m/sec ± motion of source); here, Castor C is 50 light-years away (about its actual distance). At orbital speeds of 0.0004c, transit times will vary from 50 light-years / (1 - 0.0004) light-years/year = 50.02 years = 18270 days, to 50 light-years / (1 + 0.0004) light-years/year = 49.98 years = 18255 days, a spread of about 15 days.
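
The transit-time arithmetic in the captions can be reproduced in a few lines. This sketch assumes, as the captions do, that light would travel at c plus or minus the star's orbital speed of 0.0004c. It uses 365.25 days per year. The function name is our own.

```python
DAYS_PER_YEAR = 365.25

def transit_days(distance_ly: float, beta: float) -> tuple[float, float, float]:
    """Light travel time if light's speed were c plus or minus the source's speed.

    beta is the star's line-of-sight orbital speed as a fraction of c.
    Returns (slowest, fastest, spread), all in days.
    """
    slowest = distance_ly / (1 - beta) * DAYS_PER_YEAR   # source receding: light at c - v
    fastest = distance_ly / (1 + beta) * DAYS_PER_YEAR   # source approaching: light at c + v
    return slowest, fastest, slowest - fastest

for distance in (5, 50):
    slow, fast, spread = transit_days(distance, 0.0004)
    print(f"{distance} ly: {slow:.1f} to {fast:.1f} days, spread {spread:.1f} days")
```

The spread grows in proportion to distance, which is why the 50 light-year case is ten times worse than the 5 light-year one. Under the source-speed hypothesis, this spread in arrival times is what would distort the expected velocity curve.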

The actual measurements of both of Castor C's stars look decidedly like the first graph, and they demonstrate very strongly that Einstein was right. At the binary's relatively small speeds, the difference in calculated velocities between classical and Einsteinian Doppler shifts is insignificant: only a few miles per hour, out of speeds hundreds of times the speed of sound.

June 2001 Meeting: Global Warming

by Dave Thomas

At our Wednesday, June 13th, 2001 meeting, we heard Dr. David Gutzler, UNM Earth & Planetary Sciences, speaking on "Global Warming: Science and Policy Perspectives." Prof. Gutzler discussed greenhouse warming, the current status of climate change research, and the Kyoto protocol.

Gutzler first described the Greenhouse Effect. Sunlight emitted by the roughly 6000 K sun passes through earth's largely transparent atmosphere and is absorbed by the ground. The ground re-emits the energy as infrared radiation, which heats up the air. Almost all the energy emitted by the ground is absorbed by the atmosphere in one way or another. Gutzler emphasized that the Greenhouse Effect is not a "villain." If there were no Greenhouse Effect, the earth's temperature would be 33 °C (about 59 °F) cooler than it is today, making the average temperature a chilly 255 K (-18 °C, or about 0 °F). What absorbs the infrared radiation? Nitrogen and oxygen (N2 and O2) are not good absorbers, so 99% of the atmosphere is not involved. Trace gases, including water vapor (H2O), carbon dioxide (CO2), nitrous oxide (N2O), and methane (CH4), are the key players. Ozone (O3) matters mainly for incoming sunlight: it absorbs ultraviolet strongly but plays a much smaller role in absorbing the infrared radiation emitted by the earth. Gutzler presented data showing that CO2 levels are increasing, from a pre-industrial level of 280 ppmv to 315 ppmv in 1958 and 355 ppmv in 1988. There is no plausible explanation for this increase other than human activity, Gutzler said.
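
The 255 K figure can be checked with a standard radiative-balance estimate. Absorbed sunlight, averaged over the whole globe, must equal the Stefan-Boltzmann emission of a planet with no greenhouse effect. The sketch assumes a solar constant of 1361 W/m^2, an albedo of 0.30, and a present mean surface temperature of 288 K. These are typical textbook values chosen by us, not figures from the talk.

```python
SIGMA = 5.670374419e-8     # W m^-2 K^-4, Stefan-Boltzmann constant
SOLAR_CONSTANT = 1361.0    # W/m^2 at Earth's distance (assumed typical value)
ALBEDO = 0.30              # fraction of sunlight reflected back to space (assumed)
OBSERVED_MEAN_K = 288.0    # present global mean surface temperature (assumed)

# The earth intercepts sunlight over its cross-section (pi R^2) but radiates
# from its whole surface (4 pi R^2), hence the factor of 4.
absorbed = SOLAR_CONSTANT * (1 - ALBEDO) / 4
t_no_greenhouse = (absorbed / SIGMA) ** 0.25      # solve sigma * T^4 = absorbed
greenhouse_warming = OBSERVED_MEAN_K - t_no_greenhouse
print(f"{t_no_greenhouse:.0f} K without a greenhouse effect; greenhouse warming ~{greenhouse_warming:.0f} K")
```

The result, about 255 K and about 33 degrees of greenhouse warming, matches the figures Gutzler quoted.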

Temperatures have also been rising, but that story is more complicated. In the last century, temperatures increased by 0.6 °C (see the American Geophysical Union's EOS, Vol. 80, No. 39, September 28, 1999, p. 453, "Climate Change and Greenhouse Gases" by Tamara S. Ledley et al., online at http://www.agu.org/eos_elec/99148e.html). Gutzler noted that the "Little Ice Age" was a European phenomenon, not a worldwide one. Temperature records based on oxygen isotopes overlay beautifully with CO2 levels, but is temperature responding to CO2, or is CO2 responding to temperature? For example, when changes in earth's orbit make the seas colder (the Milankovitch cycle), the seas absorb more CO2. The Greenland ice-core record, going back 100 thousand years, shows that the last ten thousand years have been very stable, the most stable in the last thousand centuries. Gutzler said he is worried because "We shouldn't mess with a good thing."

We do understand some things quite well, like the Greenhouse Effect. We know that CO2 levels are increasing due to humanity, that global temperatures are increasing, and that carbon dioxide and temperature are intimately connected in paleoclimate studies. Why is there any debate? One source of uncertainty is climate monitoring: we need to improve our climate observation system, for both present and past changes. Our knowledge of the global CO2 cycle is also incomplete: what happens to emitted CO2? Some of it goes into the oceans and some into vegetation on land, but we don't know whether either reservoir will become saturated. We need a better understanding of particulate pollution, some of which, such as sulfates, mitigates warming.

We also don't understand climate feedbacks well enough. For example, more clouds reflect more sunlight, cooling the earth, but they also re-absorb more infrared, heating the earth. More glacial ice sheets reflect more sunlight, cooling the earth and creating still more ice; as ice sheets retreat, less sunlight is reflected, heating the earth and melting more ice. As water vapor absorbs more heat, the temperature rises, allowing more water vapor to form, which absorbs more heat, and so forth.

The situation is so complicated that we need models to study it. Climate forecasts are incredibly difficult, and we've never predicted anything this complicated before. Older models represented the atmosphere with state-sized parcels of air, but clouds are much smaller than states and could not be accurately analyzed. Newer models have reduced the cell size to about a degree of latitude (70 miles or so), but the cells are still far larger than typical clouds. We can model some large-scale phenomena like the trade winds, but are still having trouble with El Niño.
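
The amplifying feedback chains described above (ice reflecting sunlight, water vapor trapping heat, "and so forth") share one arithmetic pattern: each round of warming triggers a further fraction of itself. The toy sketch below simply sums those rounds. It assumes a direct warming of 1 °C and a feedback fraction f = 0.5, both purely hypothetical. It is an illustration of the pattern, not how climate models compute feedbacks.

```python
def feedback_total(direct_warming: float, feedback_fraction: float, rounds: int = 60) -> float:
    """Toy linear feedback loop: each round of warming adds feedback_fraction more.

    Hypothetical illustration only; real climate models do not work this way.
    """
    total = 0.0
    increment = direct_warming
    for _ in range(rounds):
        total += increment
        increment *= feedback_fraction   # this round's warming triggers the next round
    return total

direct = 1.0   # hypothetical warming before any feedback, in degrees C
f = 0.5        # hypothetical combined feedback fraction (must be < 1 to converge)
amplified = feedback_total(direct, f)
closed_form = direct / (1 - f)   # sum of the geometric series 1 + f + f^2 + ...
print(f"direct {direct} C -> with feedbacks {amplified:.3f} C (closed form {closed_form:.3f} C)")
```

As long as f is below 1, the rounds settle at direct / (1 - f), so f = 0.5 doubles the direct warming. At f = 1 or above, the loop never settles, which is the runaway case. Uncertainty in f is one reason feedbacks dominate the uncertainty in forecasts.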

Prof. Gutzler concluded his talk with remarks on the Kyoto accords. The Intergovernmental Panel on Climate Change (IPCC) did not reach a conclusion on human influence in its 1990 report. By 1995, it concluded there was a discernible human effect on the climate. In 2001, it declared that most of the warming of the last 50 years is human-caused. Critics of the Kyoto accords point to discrepancies between various models as proof that they are all incorrect. Gutzler noted, however, that it is impossible for different models to agree exactly, and that we need humility in the face of so much uncertainty. The Kyoto protocol builds on the United Nations Framework Convention on Climate Change of 1992. Its goals for "Annex I" (developed) countries include reducing greenhouse gas emissions to at least 5% below 1990 levels by 2008-2012, with progress by 2005, and credit for CO2 sinks (forests, for example). Goals for the 38 Annex I countries include 6% reductions in Japan and Canada, 7% in the USA, and 8% in Europe. There are no goals for non-Annex I countries, and that's one of the sticking points. At least 55 countries must ratify the treaty before it can take effect, and the ratifying Annex I countries must account for at least 55% of Annex I countries' 1990 CO2 emissions. So far, no Annex I countries have ratified the treaty.

NMSR thanks Dave Gutzler for a very interesting talk.

May 2001 Meeting: Self-Assembling Materials

by Dave Thomas

At our Wednesday, May 9th, 2001 meeting, we heard Dr. Jeff Brinker, UNM Physics Department and Sandia National Laboratories, speaking on "Self-assembled materials ... From Dishwashing to Nanostructures." Brinker is helping to try out a novel collaboration between UNM and Sandia. So far, he said, it has produced some interesting results.

Brinker's goals are not overly ambitious. He simply wants to learn how to control matter on the nanometer scale, devising methods to give materials life-like qualities such as sensing and responding to their environments. Jeff also wants to learn how to make a material that can repair, replicate, or propel itself. The tricky part is doing this at nano-scales, anywhere from 1 to 100 nanometers (nm, billionths of a meter). [For comparison with familiar units, a 1-mil (1/1000 inch) garbage bag is a whopping 25,400 nm thick; the wavelength of yellow light is about 580 nm.]

Brinker's experiments are on such small scales that he needs a Transmission Electron Microscope just to see what's happening.

At such small scales, photolithography, the method used to produce intricately detailed integrated circuits, is far too bulky and clumsy. With the wavelength of light many times larger than the desired work area, it's like trying to thread a needle with a rope. Brinker was thus led to study methods in which materials assemble themselves.

One remarkable self-organizing material is common dish detergent. Brinker said detergents are "pre-programmed for self-assembly." Detergents are made of molecules that are hydrophilic (water-soluble) on one end and hydrophobic (attracted to oils, but repelled by water) on the other end. When detergent is added to water, and energy